Screenreaders, Twitter and OCR

In between living with sink-lessness and unpacking in my old-new home, I found some time to read the #DigiWriMo tweets. After my guest blog post on Digital Inclusion, several people tweeted about the Twitter tips and how my suggestions might lead them to rethink how they write or tweet. It was good to read about these “wonderings” and to have folks talking about digital inclusion.

@brunowinck @Maha4Learning @yinbk are uppercase chars generally easier on dyslexics? #DigiWriMo #a11y

— ℳąhą Bąℓi, PhD مها بالي 🌵 (@Bali_Maha) November 6, 2015

@drgbz @40houradjunct @NomadWarMachine @scubagogy if the OCR tech exists i wonder if it's been used for #a11y – @yinbk #DigiWriMo

— ℳąhą Bąℓi, PhD مها بالي 🌵 (@Bali_Maha) November 7, 2015

@drgbz @NomadWarMachine @Bali_Maha @scubagogy interested to know. Evernote reads images. Can screenreaders?

— Jenn Kepka (@jennkepka) November 7, 2015

@40houradjunct @Bali_Maha @scubagogy I love being able to take pics of long quotes to get more words, or use pull quote #DigiWriMo #DigPed

— Sarah Honeychurch (@NomadWarMachine) November 6, 2015

From my POV (Point of View), these tweets center on a few key ideas.

- What does a screen reader do? How and what does it read?

- How can we provide text descriptions for images (not readable by screen readers) in a practical, doable way on Twitter?

- Is OCR technology (Optical Character Recognition, technology that converts digital images of text into machine-readable, searchable text) used for purposes of accessibility?

Screen Reader Technology

Let me state up front that I’m not a screen reader user. I’ve seen a limited number of blind and visually challenged people use screen readers. I’ll point you all to an old (April 14, 2015) blog post I wrote about how I invited a blind user to test out my Summer 2015 online course. It might address some of the concerns you have about how to tweet if you want to use images.

The quick gist of my old post: I highlight three major points on ensuring accessibility when designing a course “worth” learning.

First, I have a very short (31-second) video on the page that demonstrates how JAWS, a screen reader, begins by giving a summary of the layout of a webpage. Hence, how you organize your digital content is important. Second, I highly recommend that you use the headings offered by the HTML editor, instead of just making your own text “bold” to make it look like a header. In the same way, use the style options offered in Microsoft Word to make your document accessible to screen readers.
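To make the heading point concrete, here is a rough Python sketch. It is only an illustration and not how JAWS works internally: it assumes that a page summary is built from real heading tags (h1 to h6), and the names `HeadingOutline`, `heading_outline` and `sample_page` are made up for this example.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect (level, text) for each h1-h6 element; all other tags are ignored."""

    HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.outline = []
        self._level = None  # heading level currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADING_TAGS:
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        # Only keep text that sits inside a real heading element.
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in self.HEADING_TAGS and self._level is not None:
            self.outline.append((self._level, "".join(self._text).strip()))
            self._level = None


def heading_outline(html):
    """Return the list of (level, text) headings found in an HTML string."""
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    return parser.outline


# Hypothetical course page: "Readings" is only bold, "Assignments" is a real heading.
sample_page = """
<h1>Week 1</h1>
<p><b>Readings</b></p>
<p>Chapter 1</p>
<h2>Assignments</h2>
<p>Post to the forum.</p>
"""

print(heading_outline(sample_page))
```

In this sketch the bold “Readings” line never shows up in the outline, because nothing in the markup says it is a heading. It only looks like one to sighted readers.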

Twitter and EasyChirp

Then, my third point in the old blog post talks about how to make images on Twitter readable by screen readers, with some of the pointers I summarized from DigitalGov’s website.

To add descriptions to images inserted in tweets, we can use EasyChirp. You can sign in to Twitter through EasyChirp [http://www.easychirp.com/].

I didn’t mention this in my #DigiWriMo post because I wasn’t sure whether you would be inclined to use another client to tweet. In hindsight, I should have! EasyChirp allows you to add ALT Text (metadata searchable and readable by machines, e.g. screen readers).
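As a rough illustration of what ALT text gives a machine to work with, here is a small Python sketch. It does not use EasyChirp or Twitter at all. It assumes plain HTML, where the description lives in an image’s `alt` attribute, and the names `ImageDescriptions`, `image_descriptions` and `sample_html` are made up for this example.

```python
from html.parser import HTMLParser


class ImageDescriptions(HTMLParser):
    """Record (src, alt) for every <img>; alt is None when the attribute is missing."""

    def __init__(self):
        super().__init__()
        self.images = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            self.images.append((attributes.get("src"), attributes.get("alt")))


def image_descriptions(html):
    """Return the text alternative available for each image in an HTML string."""
    parser = ImageDescriptions()
    parser.feed(html)
    parser.close()
    return parser.images


# Hypothetical markup: one image with ALT text, one without.
sample_html = (
    '<img src="quote.png" alt="Quote: digital inclusion starts with design.">'
    '<img src="photo.png">'
)

for src, alt in image_descriptions(sample_html):
    print(src, "->", alt if alt is not None else "(no description available)")
```

The second image has nothing a text-only tool can read out, which is the gap that ALT text fills.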

I’m including in this post a few “old” tweets by folks in the #accessibility community who have discussed the issue of ALT text descriptions for images inserted in tweets.

@NeilMilliken @EasyChirp @dboudreau Thanks for the feedback. It's an important area that is all too often overlooked. #AXSChat

— Ability First (@AbilityFirstLdn) April 14, 2015

@dboudreau @Nethermind Curious if either of you have input on ALT descriptions for @Twitter images? #a11y #accessibility #AXSChat

— Ability First (@AbilityFirstLdn) April 14, 2015

@sarahjevnikar Right. Until @twitter decides to do something about it, EasyChirp is still your best option. /cc @AbilityFirstLdn @Nethermind

— Denis Boudreau (@dboudreau) July 9, 2015

@dboudreau Thanks! @AbilityFirstLdn More: http://t.co/k8uBWkeXhm @Nethermind @twitter

— Dennis Lembrée ⛄️ (@dennisl) April 14, 2015

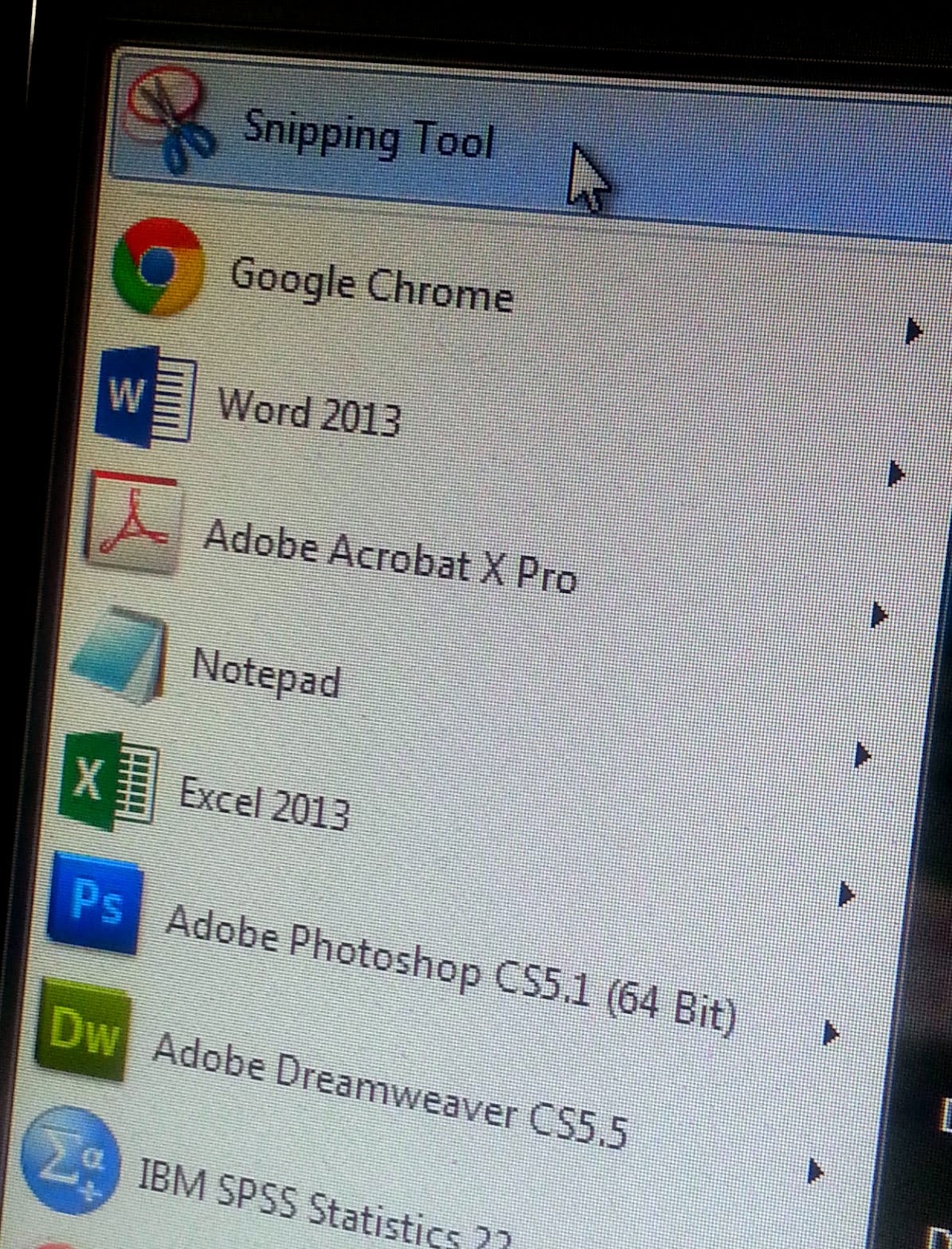

Here’s what it looks like when I signed in to EasyChirp to post a tweet:

See? You have the option to add ALT Text (short description) and a long description (e.g. long quotes on images?).

If you have both clients or more (TweetDeck?) open when you tweet, you might just find this easier than using Twitter alone and trying to implement some of the DigitalGov guidelines, which are all good by the way.

OCR Technology

Maha, you asked a brilliant question! Indeed, OCR technology is used to improve accessibility. I pulled the quote below from Chris Adams’ insightful post (Aug 14, 2014) on making scanned images searchable and readable. (Chris Adams is from the Repository Development Center at the Library of Congress. He is the technical lead for the World Digital Library.) Please check out his post for more details.

OCR is the obvious solution for extracting machine-searchable text from an image but the quality rates usually aren’t high enough to offer the text as an alternative to the original item. Fortunately, we can hide OCR errors by using the text to search but displaying the original image to the human reader. This means our search hit rate will be lower than it would with perfect text but since the content in question is otherwise completely unsearchable, anything better than no results will be a significant improvement. (Italics mine for emphasis)
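The idea in the quote, searching the imperfect OCR text but showing the original image, can be sketched in a few lines of Python. This is a toy illustration under my own assumptions, not the Library of Congress implementation. The `ScannedPage` type, the `search_pages` function, the file paths and the OCR strings are all made up.

```python
from dataclasses import dataclass


@dataclass
class ScannedPage:
    image_path: str  # what the human reader is shown
    ocr_text: str    # noisy machine text, used only for matching


def search_pages(pages, query):
    """Return image paths of pages whose OCR text contains every query word."""
    words = query.lower().split()
    if not words:
        return []
    return [
        page.image_path
        for page in pages
        if all(word in page.ocr_text.lower() for word in words)
    ]


# Hypothetical scans; "inclusi0n" imitates a typical OCR misread.
pages = [
    ScannedPage("scans/page-001.png", "Digital inclusi0n begins with accessible design"),
    ScannedPage("scans/page-002.png", "Screen readers rely on headings and text"),
]

print(search_pages(pages, "accessible design"))
print(search_pages(pages, "inclusion"))
```

The first search finds page 1 and would display the scanned image, so the reader never sees the misread text. The second search misses that same page because of the OCR error, which is the lower hit rate the quote mentions.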

Thank you all for the thoughtful questions and conversation. Keep them coming!