Assessing Student Learning in Online Education Part 1

|

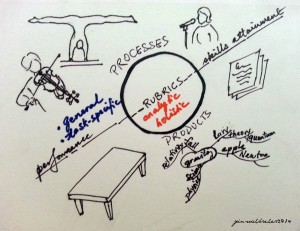

| Assessment types. |

Besides learning engagement, assessing student learning is one of teachers' top concerns (top 3?). The burden is no lighter for online teachers. Much as teachers might like to banish this bane of teaching from their jobs, assessment (evaluation, retention, accreditation, and all related concerns) won't go away, because "measuring" learning and attaching a score or grade to course completion is deeply embedded in the institutional culture of formal education. Giving grades meaningfully is a priority for me.

Recently, the role of rubrics in online learning assessment was (tangentially?) touched on at a meeting. That remark led me to a series of conversations with folks around me. Each offered a unique perspective, but I was left unsatisfied, through no fault of theirs. I wondered whether I had kept up with the current wave of discourse about assessment in online learning. This blogpost is part of my ongoing effort to learn more about the latest in online assessment [As a mini literature review, it's somewhat long, sorry!].

1. There are few empirical studies about assessment in online learning. Most studies in online education have focused on course design, technology implementation, and comparisons of learning outcomes across modes of instruction, i.e., degrees of hybridity or blending, from F2F to fully online (Cheng, Jordan, Schallert & D-Team, 2013).

2. The nature of online learning has influenced the adoption of grading rubrics:

- Rubrics have been used to provide the explicit instructions and clear communication essential for efficient and effective learning across space and time. Proponents of rubric use argue that rubrics provided by the online teacher give learners explicit guidance by describing the levels of performance expected of them.

- Rubric grading is a legacy of traditional face-to-face instruction. Some teachers who are new to online teaching may not be aware that a new and different learning environment calls for a new approach to teaching. Or they may find assessing online learning formidable, given the distance and the lack of immediacy in interaction. Either way, how they assessed in the classroom carries over into their online teaching.

3. Online teachers frequently use asynchronous forum discussions as a way to “provide a sense of connection among participants” (Dennen, 2008).

In the online learning environment, no one knows whether a student is present unless s/he communicates in some way, through text, image, sound or video. Historically, many teachers have relied on rubrics to assess participation and whether forum discussions have enhanced learning. Yet research on asynchronous online discussions has not kept pace with the popularity of this assessment practice (Cheng et al., 2013).

4. Writing a good set of rubrics is a challenge. What are rubrics, anyway? To quote Susan Brookhart (2013, Association for Supervision and Curriculum Development website and book):

The genius of rubrics is that they are descriptive and not evaluative. Of course, rubrics can be used to evaluate, but the operating principle is you match the performance to the description rather than “judge” it. Thus rubrics are as good or bad as the criteria selected and the descriptions of the levels of performance under each. Effective rubrics have appropriate criteria and well-written descriptions of performance.

Brookhart’s book provides an introduction to different types of rubrics a teacher may consider using.

Types of rubrics (Brookhart, 2013).

She writes:

When the intended learning outcomes are best indicated by performances—things students would do, make, say, or write—then rubrics are the best way to assess them… Except in unusual cases, any one performance is just a sample of all the possible performances that would indicate an intended learning outcome.

Whether or not to use rubrics in an online course depends on a few factors:

- The purpose of the assessment. What do we want to assess? What is it about the learning experience we value? The process of learning? Learning products? Both? The sum of the learning experience or parts of it?

- Formative and/or summative assessment

- Individual and/or group assessment

Although teachers may use rubrics to assess both the learning process (the development or emergence of some mental schema, i.e., cognition) and the final product (written reflections) of asynchronous discussions, it is difficult to develop a robust rubric that fully measures (or captures) how students read, write and engage in online discussions.

5. The use of grading rubrics in assessing online discussions is problematic.

Rubrics, more often than not, are used to grade individual expressions or reproductions of knowledge rather than learning itself (Cheng et al., 2013). Rubrics that grade collaborative work remain a challenge to develop. Even without rubrics, collaborative learning is itself complicated to design and assess (Swan et al., 2006).

Dennen (2008), who has researched "productive lurking" (an eye-opening concept for me!), argues that learning is an invisible practice, and that non-visible learning activities such as lurking can be engaging and can serve as a developmental stage for some students. As they watch how other students learn, they move toward visible learning themselves (a hint of Lave & Wenger's (1991) Legitimate Peripheral Participation (LPP) in Communities of Practice (CoP)?). Teachers are more likely to assess learners on what they write (Dennen, 2008) than on their reading and engagement while lurking. What evidence might online teachers look for?

Moreover, frequency counts (indicative of participation, but not always of learning) and content and quality analyses of postings are time-consuming for teachers, especially those teaching large courses. When students are awarded a grade for creating a certain number of postings, what message are we sending them? Is low-visibility work such as reading and reflecting less important?

With the increasing use of social media such as blogs and Twitter chats for communication and discussion, some teachers have created blog rubrics to motivate students to write blog posts.

My concern about some blog grading rubrics is that they are too task-specific and sometimes steer students toward details that are not what matters most in learning. Not to mention that grading blog posts is tedious work for a teacher working alone. When I was a TA in graduate school, I created a spreadsheet to help the instructor keep track of postings so that she could grade them. The class had no more than 20 students, divided into small groups so that they would comment on one another's posts. What challenges would a course with 800 students present if the teacher planned to grade posts to provide continuous feedback? How many teachers have the privilege of TA help?
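To make the tracking spreadsheet concrete, here is a minimal sketch of the same bookkeeping in Python. It assumes a hypothetical export in which each posting records its author, whether it is a post or a comment, and (for comments) whose post it replies to; the `Posting` class, the function names, and the sample data are all illustrative, not any platform's real format. As noted above, these counts indicate participation, not necessarily learning.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Posting:
    author: str
    kind: str                         # hypothetical categories: "post" or "comment"
    replied_to: Optional[str] = None  # for comments: author of the post commented on


def tally(postings):
    """Count posts and comments per student, as a tracking spreadsheet might."""
    posts = Counter(p.author for p in postings if p.kind == "post")
    comments = Counter(p.author for p in postings if p.kind == "comment")
    students = sorted(set(posts) | set(comments))
    return {s: (posts[s], comments[s]) for s in students}


def missing_peer_comments(postings, groups):
    """For each student, list group-mates who posted but whom the student has not commented on."""
    posted = {p.author for p in postings if p.kind == "post"}
    commented = {(p.author, p.replied_to) for p in postings if p.kind == "comment"}
    gaps = {}
    for members in groups:
        for s in members:
            gaps[s] = sorted(
                m for m in members
                if m != s and m in posted and (s, m) not in commented
            )
    return gaps


# Hypothetical small-group log
log = [
    Posting("Ana", "post"),
    Posting("Ben", "post"),
    Posting("Ben", "comment", replied_to="Ana"),
    Posting("Cai", "comment", replied_to="Ana"),
]
groups = [["Ana", "Ben", "Cai"]]
print(tally(log))
print(missing_peer_comments(log, groups))
```

Even automated, such counts only tell a teacher who has posted and where peer feedback is missing; they say nothing about the reading and reflecting that happen out of view.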

6. How can we assess online discussions more holistically?

Dennen (2008, p. 215) suggests three further analytic methods, besides participation measures and content analysis, for examining online discussions. Note: Dennen examines these methods for assessing and researching online discussions as evidence of learning. I am extending her ideas on discussion analysis to networked, connected online conversations in these post-Web 2.0 days:

- structural analysis (the "structure" of a discussion or conversation: who talks to whom and who holds power; includes social network analysis; helpful for noting the dynamics of collaborative work; used by itself, this method will not give teachers a complete picture of learning)

- microethnography (as the name suggests, akin to ethnographic study; conversations are explored in their contexts through surveys/polls, interviews, and analysis of digital footprints)

- dialogue analysis (examines the interrelatedness of messages and ideas in a dialogue; can be interpretive)
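The "who talks to whom" part of structural analysis can be sketched in a few lines. The example below assumes, hypothetically, that forum replies can be exported as (replier, original author) pairs and that a class roster is available; the names and data are invented. It is only a simple degree count (how many distinct peers each student replies to and hears from), not a full social network analysis, which would typically use a dedicated library.

```python
from collections import defaultdict


def reply_network(replies):
    """Build a directed who-replies-to-whom network from (replier, original_author) pairs."""
    edges = defaultdict(set)
    for replier, author in replies:
        if replier != author:  # ignore replies to one's own post
            edges[replier].add(author)
    return edges


def degree_summary(edges, roster):
    """Return {student: (out_degree, in_degree)}, counting distinct conversation partners."""
    in_deg = defaultdict(int)
    for targets in edges.values():
        for t in targets:
            in_deg[t] += 1
    return {s: (len(edges.get(s, ())), in_deg[s]) for s in roster}


# Hypothetical roster and reply log (Ben replies to Ana twice; counted once)
roster = ["Ana", "Ben", "Cai", "Dee"]
replies = [("Ben", "Ana"), ("Cai", "Ana"), ("Ana", "Ben"), ("Cai", "Ben"), ("Ben", "Ana")]

edges = reply_network(replies)
summary = degree_summary(edges, roster)
no_visible_ties = [s for s, (out_d, in_d) in summary.items() if out_d == 0 and in_d == 0]
print(summary)
print(no_visible_ties)
```

Including the full roster matters: a student like Dee, who never posts, would otherwise not appear at all. In keeping with Dennen's point about productive lurking, a student with no visible ties is a prompt to look more closely, not evidence that no learning is taking place.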

I can see all three methods of analyzing online conversations benefiting online teachers. Including an analysis, and hence an awareness, of the social network(s) of learners would give teachers a more complete picture of the complexity of learning in their online course communities. Lin and Lai (2013) conducted an intriguing study that combined a traditional online formative quiz with social network awareness: students' knowledge of their peers' social network positions, social distance, and willingness to help others, relative to their own network position, promoted learning achievement.

Nonetheless, I understand that teachers are busy people. They do not have to use all three methods, nor try them on a large scale (e.g., with 800 students) at the start, unless they are planning to research their online courses. A team-based approach to online teaching and/or research might ease some of the problems that come with having too little time to do "so much."

7. If not rubrics, or besides rubrics, how else do we know if learning has taken place in an online environment?

How do we communicate to learners explicit expectations about the learning that matters, including expectations of interaction, quality and performance, so that student projects do not suffer from a lack of effort or quality? What about when we incorporate peer assessment into online learning? What if students are new to online learning or new to college?

I've been mulling over these questions. My current, probably not very original, thinking is this:

It's time to move beyond using only rubrics to assess (or grade) online discussion boards in the hope that students will post to show engagement. On their own, online discussion boards with rubrics thrown in are inadequate for assessing student learning.

Recent ideas for alternative assessment practices include the following:

- Courageous Conversation: Formative Assessment and Grading (Andrew Miller)

- Learning Contracts (Dave Cormier)

- Contract Grading (Cathy Davidson)

- How to crowdsource grading (Cathy Davidson, read the comments too)

As a grad student, I wrote one learning contract, but the teacher did not follow up on it at the end of the course. So, teachers, instructors, professors: whatever you have contracted with your students to complete (a two-way learning effort), it becomes meaningful learning only if students receive some feedback on what you asked them to do, whether from a machine, a teacher, a fellow student, or a member of the public (though a response from the public is not guaranteed).

With the move toward personalized learning and learner-centered instruction, students are given more opportunities to self-assess and monitor their own learning. However, in an institutional culture built around programs and degree completion, student learning assessment is still a HUGE priority in faculty professional development when teachers talk about preparing to teach online.

This blogpost is an expression of my preliminary thoughts on the topic. More reading/conversation is needed and ideas will evolve as I read and synthesize more information.

Articles/Books I read to synthesize ideas:

Edutopia. (2008, July 15). What are some types of assessment? [Blog post]. Retrieved from http://www.edutopia.org/assessment-guide-description

Lin, J. W., & Lai, Y. C. (2013). Online formative assessments with social network awareness. Computers & Education, 66, 40-53.

Swan, K., Shen, J., & Hiltz, S. R. (2006). Assessment and collaboration in online learning. Journal of Asynchronous Learning Networks, 10(1), 45-62.
